Meta's Innovative AI Race: Deploying Canvas Structures to Meet Soaring Compute Demand Now

Unprecedented Demand Drives Temporary Solutions

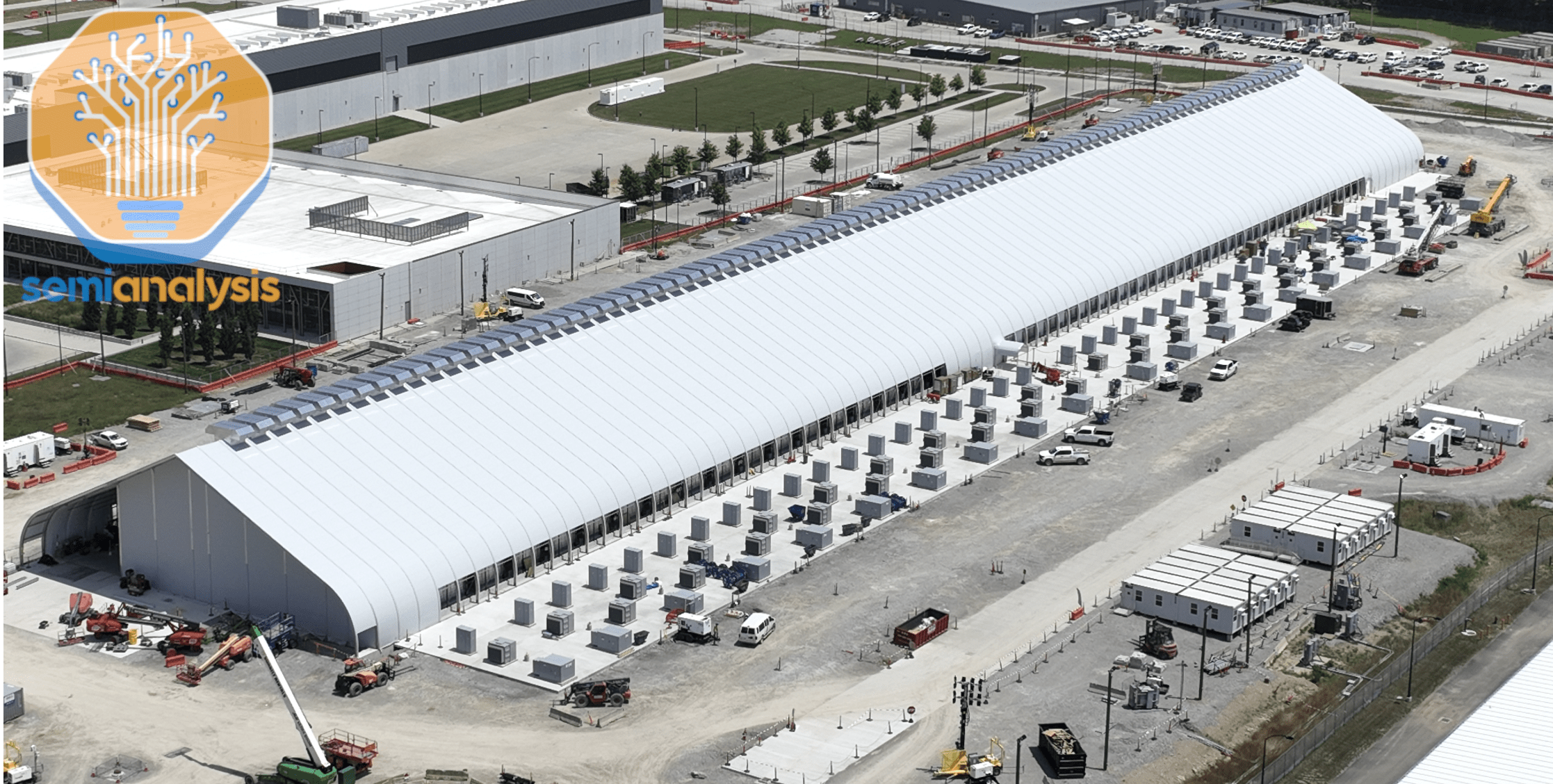

The escalating global competition in artificial intelligence has forced rapid, sometimes unexpected decisions. Nowhere is this urgency more tangible than at Meta, one of the world’s largest tech groups, which has taken the striking step of deploying cutting-edge computing equipment inside temporary canvas structures. The move shows how demand for advanced machine learning infrastructure is outpacing the supply of permanent server housing.

The decision marks an inflection point for the company. Its traditional data centers are at capacity, even as its ambitions in generative models and supercomputing keep growing. Temporary IT facilities usually signal a crisis or a one-off surge. Here they are simply business as usual in the race for AI leadership. The company’s push to secure high-density computing reflects a fierce scramble among industry powerhouses, where a delay of even a few months can mean missed milestones or lost ground to competitors.

Sources familiar with the rollout stress that speed is now a core metric. The latest generation of large models requires more than scalable cloud contracts or colocation deals. It requires racks, networking, and power that are available almost immediately and at enormous scale. In this environment, canvas structures are not a step backward but a calculated waystation in a high-stakes race to bring new AI applications to market as quickly as possible.

Building the Future: Permanent Facilities in the Pipeline

Short-term measures rarely operate in a vacuum. Even as equipment is stood up under canvas, construction is steadily underway on a major new computing campus in the American South. Planned as the company’s largest data center campus, the facility will cover 4 million square feet on a 2,250-acre site, making it one of the largest single investments in digital infrastructure to date. When fully operational, the development is projected to draw 2 gigawatts of power for data workloads, with plans to scale up to 5 gigawatts. At that scale, it would set new records for both physical size and impact on the surrounding region.

The site is designed from the ground up to support the next wave of conversational agents, recommendation engines, and synthetic media tools. Unlike legacy data centers, these new builds prioritize ultra-high-density compute and minimal latency, relying on advanced cooling methods and energy sourcing. The result is a facility optimized for training large language models and for scaling real-time inference across global platforms. National and regional leaders see the initiative as a major opportunity for economic growth, job creation, and clean-energy partnerships.

Headline numbers such as thousands of construction jobs and hundreds of permanent roles draw attention. The deeper significance, however, lies in the capacity to accelerate innovation cycles. For any organization pursuing digital transformation, the ability to rapidly deploy and iterate on next-generation workloads is no longer a luxury. It is the new baseline for survival and leadership.

Strategic Priorities: Speed, Scale, and Compute Leadership

The rationale behind these logistical pivots and colossal construction projects lies in the firm’s recalibrated strategy: speed now comes before traditional redundancy. In earlier eras, large data facilities were built for maximum durability, with layers of contingency. Today the imperative is to deploy as quickly as is safely possible, without waiting for perfect circumstances or for every box to be checked. This approach is meant to help the company close the computing gap with established leaders in the field and restore its ability to innovate and launch at an unrelenting pace.

Industry analysts suggest that this pivot is not about appearance or long-term prestige. It is about getting enough computational power online now to train and test large-scale models in-house. Modular infrastructure, prefabricated cooling systems, and flexible power setups typify the new normal. When new-build construction is delayed, stopgap installations keep internal research moving, customer demand met, and feature rollouts on schedule.

Ultimately, the story reflects a critical chapter in the evolution of the broader machine learning industry. Every major advance in neural architecture, multimodal reasoning, and real-time AI depends on infrastructure that can keep pace. Terms like hyperscaling, compute clusters, and AI model training are entering everyday vocabulary, and the infrastructure behind them underpins the next wave of global digital transformation. The canvas-roofed deployments may be temporary, but the race for compute leadership is only intensifying. Every server that comes online pushes the boundaries of what is possible in artificial intelligence a little further.