Inside Gitingest: How to Convert GitHub Repositories into Neural Network-Ready Text in Minutes

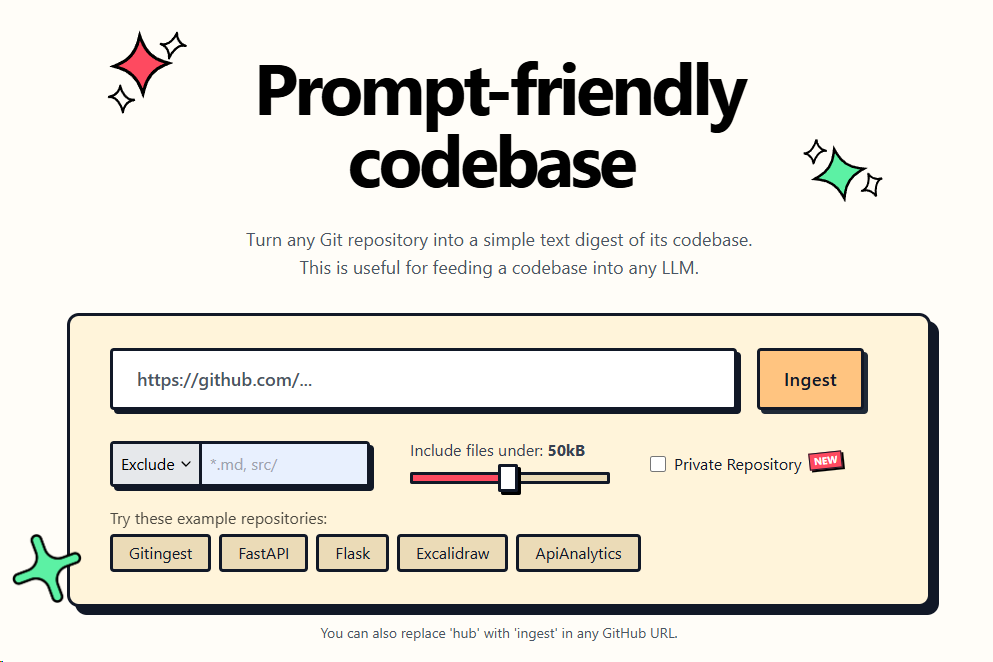

Gitingest, an open-source tool, simplifies a chore familiar to developers and AI practitioners: turning an entire GitHub-hosted codebase into text that a machine learning model can consume. Given only a repository URL, it extracts the repository's contents and restructures them into a single plain-text digest suited to large language models and other neural architectures.

The tool narrows the gap between complex code repositories and their use as training or analysis data for neural networks. Users can filter out irrelevant files or directories, so the resulting digest stays focused on the code that matters for the task at hand.

The tool's main convenience is that it automates what would otherwise be laborious manual extraction and preprocessing. Users supply the repository address and the platform handles the rest, producing output ready to drop into a model prompt or a data pipeline. On the web, replacing "hub" with "ingest" in a GitHub URL opens the corresponding digest directly.
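As an illustration of that first step, here is a minimal Python sketch of how a service might turn a pasted repository address into an owner, a repository name, and a clone URL. The helper names `parse_github_url` and `clone_url` are hypothetical and not part of Gitingest's API, and only github.com URLs are handled.

```python
from urllib.parse import urlparse


def parse_github_url(url: str) -> tuple[str, str]:
    """Split a GitHub repository URL into (owner, repo).

    Hypothetical helper for illustration; not part of Gitingest's API.
    """
    # Accept bare "github.com/owner/repo" input by adding a scheme first
    parsed = urlparse(url if "://" in url else "https://" + url)
    if parsed.netloc.lower() not in ("github.com", "www.github.com"):
        raise ValueError(f"not a github.com URL: {url!r}")
    # The path looks like "/owner/repo" or "/owner/repo/tree/main/src"
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"missing owner or repository name: {url!r}")
    owner, repo = parts[0], parts[1]
    # Drop the ".git" suffix that clone URLs usually carry
    if repo.endswith(".git"):
        repo = repo[: -len(".git")]
    return owner, repo


def clone_url(owner: str, repo: str) -> str:
    """Build the HTTPS clone URL a backend could hand to `git clone`."""
    return f"https://github.com/{owner}/{repo}.git"
```

For example, `parse_github_url("https://github.com/octocat/Hello-World/tree/main/docs")` returns `("octocat", "Hello-World")`, discarding the branch and subdirectory suffix; a real service would probably keep that suffix to scope the digest to part of the repository.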

Technical Insights and Mechanism

Under the hood, the tool walks the repository's hierarchical directory tree and concatenates the text it finds (source files, scripts, and documentation) into one cohesive prompt, prefaced by a view of the directory structure, that large language models or other machine learning systems can ingest efficiently.
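The directory walk and concatenation described above can be sketched with Python's standard library. This is an illustration under stated assumptions, not Gitingest's implementation: the function name `build_digest`, the indentation-based tree, and the `FILE:` header layout are all hypothetical, and any file that fails to decode as UTF-8 is treated as binary and skipped.

```python
import os
from pathlib import Path


def build_digest(root: str) -> str:
    """Concatenate a directory tree into one prompt-style text digest.

    Hypothetical sketch; the tree and header formats are assumptions.
    """
    root_path = Path(root)
    tree_lines: list[str] = []
    sections: list[str] = []
    for dirpath, dirnames, filenames in os.walk(root_path):
        dirnames.sort()  # sort in place so os.walk visits subdirectories in order
        rel_dir = Path(dirpath).relative_to(root_path)
        depth = len(rel_dir.parts)  # 0 for the repository root
        if depth > 0:
            tree_lines.append("    " * (depth - 1) + f"{rel_dir.name}/")
        for name in sorted(filenames):
            file_path = Path(dirpath) / name
            tree_lines.append("    " * depth + name)
            try:
                text = file_path.read_text(encoding="utf-8")
            except (UnicodeDecodeError, OSError):
                continue  # listed in the tree, but content skipped (binary/unreadable)
            rel = file_path.relative_to(root_path).as_posix()
            sections.append(f"{'=' * 48}\nFILE: {rel}\n{'=' * 48}\n{text}")
    return (
        "Directory structure:\n"
        + "\n".join(tree_lines)
        + "\n\n"
        + "\n\n".join(sections)
    )
```

Calling `build_digest("path/to/repo")` returns a single string that can be written to a file or pasted into a prompt. Sorting directory and file names keeps the output deterministic, so two digests of the same commit can be compared directly.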

Because language models read text as tokens and have finite context windows, the tool also reports statistics such as the estimated token count and file sizes. Users can therefore gauge how large and complex a digest is before starting any training or inference.
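A rough version of these statistics needs only the standard library. The sketch below assumes about four characters per token, a common rule of thumb for English-like text; real tokenizers (for example OpenAI's tiktoken) give exact, model-specific counts, and source code may tokenize quite differently. The names `estimate_tokens`, `summarize`, and `format_count` are hypothetical.

```python
from pathlib import Path

# Assumed rule of thumb: ~4 characters per token for English-like text.
# A real tokenizer gives exact, model-specific counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate a token count with a character-based heuristic."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN  # ceiling division


def summarize(root: str) -> dict[str, int]:
    """Count readable text files, their total bytes, and estimated tokens."""
    files = 0
    total_bytes = 0
    total_tokens = 0
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # binary or unreadable files are not counted
        files += 1
        total_bytes += path.stat().st_size
        total_tokens += estimate_tokens(text)
    return {"files": files, "bytes": total_bytes, "estimated_tokens": total_tokens}


def format_count(n: int) -> str:
    """Render a count compactly, e.g. 1234 -> '1.2k'."""
    if n >= 1_000_000:
        return f"{n / 1_000_000:.1f}M"
    if n >= 1_000:
        return f"{n / 1_000:.1f}k"
    return str(n)
```

For instance, `format_count(summarize("path/to/repo")["estimated_tokens"])` yields a short figure that can be checked against a target model's context window before the digest is used.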

The tool also supports exclusion patterns. Users can name file extensions or folders to skip during compilation, keeping binaries, large media files, and ancillary documents that add nothing to code understanding out of the digest.
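Exclusion patterns of this kind are commonly implemented with shell-style globs. The sketch below uses Python's `fnmatch.fnmatchcase` and treats a pattern with a trailing `/` as a directory name to skip. The pattern syntax, the `is_excluded` helper, and the `DEFAULT_EXCLUDES` list are assumptions for illustration, not the tool's documented behavior.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Hypothetical default patterns for illustration; adjust them per project.
DEFAULT_EXCLUDES = ["*.png", "*.jpg", "*.zip", "*.pdf", "node_modules/", ".git/", "dist/"]


def is_excluded(rel_path: str, patterns: list[str]) -> bool:
    """Return True if a repository-relative POSIX path matches any pattern.

    A pattern ending in "/" skips a directory (and everything below it) by name;
    any other pattern is a case-sensitive shell glob tested against both the
    file name and the full relative path.
    """
    path = PurePosixPath(rel_path)
    for pattern in patterns:
        if pattern.endswith("/"):
            # Compare against directory components only, not the file name
            if pattern.rstrip("/") in path.parts[:-1]:
                return True
        elif fnmatchcase(path.name, pattern) or fnmatchcase(rel_path, pattern):
            return True
    return False
```

A caller would filter candidate paths before reading them, e.g. `[p for p in paths if not is_excluded(p, DEFAULT_EXCLUDES)]`. Note that in `fnmatch` a `*` also matches `/`, so a pattern such as `docs/*.md` excludes Markdown files in nested subdirectories of `docs` as well.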

For privacy, the tool can also run locally or inside a controlled environment, so proprietary code does not have to leave the organization's infrastructure.

Broader Implications and Use Cases

Turning repositories into model-ready text supports a range of applications, including AI-assisted code review, automated documentation generation, and coding assistants that offer context-aware suggestions.

Fast digest generation also helps research that applies machine learning to software engineering problems. Researchers can experiment quickly with tasks such as code comprehension or error-pattern recognition without first preparing a dataset by hand.

Beyond the web interface, a command-line tool and a Python package make it easy to adopt for individual developers and large engineering teams alike. Because the project is open source, the community can refine it and adapt it as AI tooling evolves.

In short, Gitingest makes it faster and easier to use code repositories as input to modern neural networks, opening the way to more automation and insight in software development.