How Apple SimpleFold Is Transforming Protein Structure Prediction and Biomolecular Modeling

Apple’s SimpleFold: A Game-Changer in Protein Structure Prediction

Introducing a Lean Approach to Biomolecular Modeling

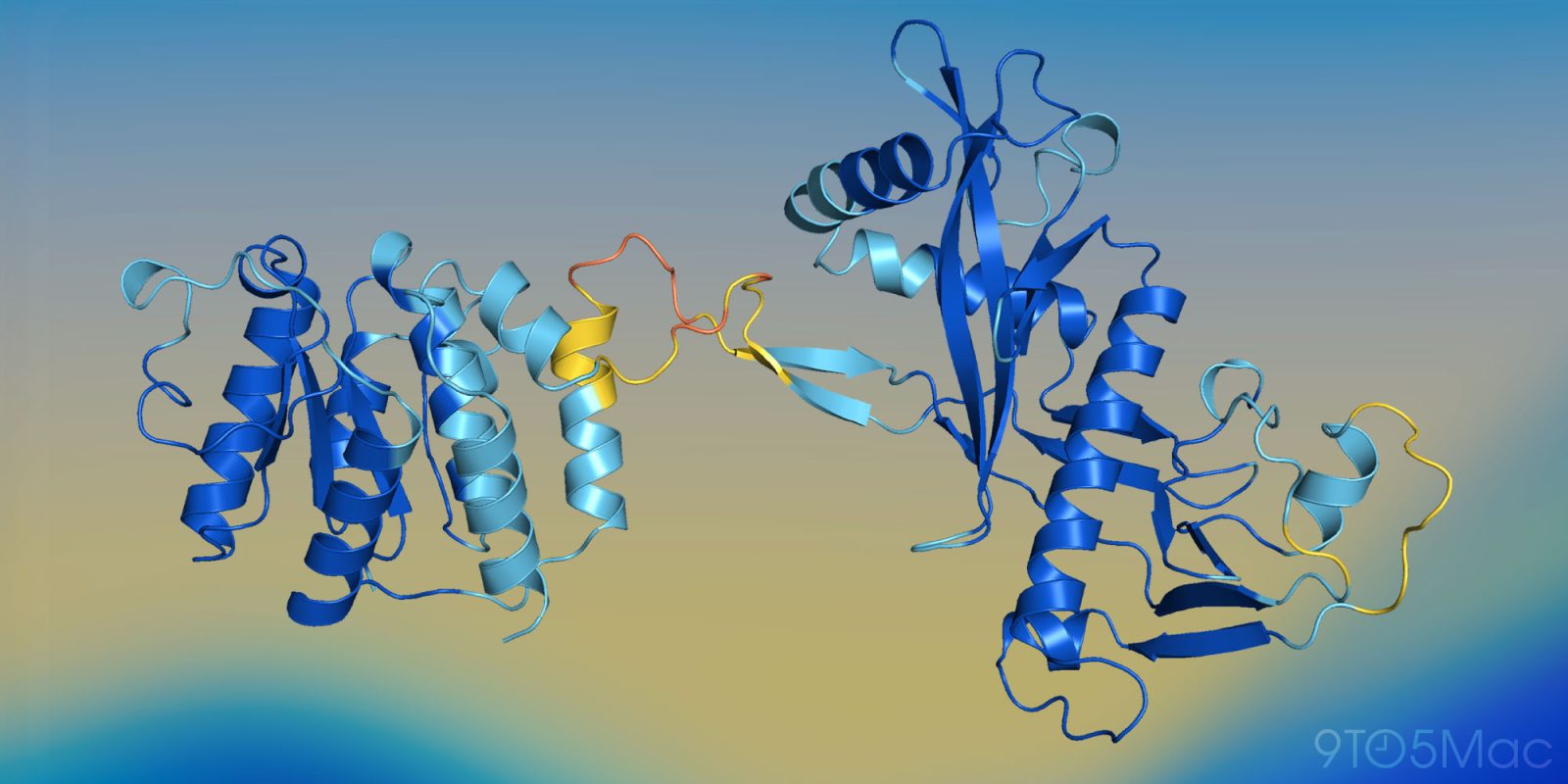

Apple has announced SimpleFold, a family of neural networks for predicting the three-dimensional structure of proteins. It takes a far leaner approach to biomolecular modeling than its predecessors. Where previous solutions often relied on intricate architectures customized for structural biology, SimpleFold is built primarily from standard transformer blocks, akin to those powering large language models.

The largest of these models, SimpleFold-3B, stands out for its efficiency: it delivers approximately 95% of the predictive accuracy of widely used alternatives while demanding only a fraction of the computational power, an efficiency gain of more than twentyfold. A much more compact variant, with around 100 million parameters, is over four hundred times more efficient and still achieves nearly 88% of the benchmark performance. This shift in the trade-off between prediction accuracy and resource use could have far-reaching consequences for sectors ranging from pharmaceuticals to synthetic biology.
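
To make the trade-off concrete, the short sketch below turns the quoted figures into relative compute cost. It assumes that an "efficiency factor" of N means roughly 1/N of the baseline's compute, which is an interpretation rather than something the announcement spells out. The baseline is normalized to 1.0, and the function names are illustrative.

```python
# Figures quoted in the article, relative to a baseline normalized to 1.0.
# "accuracy" is the approximate fraction of baseline accuracy retained.
# "efficiency" is the quoted lower bound ("more than 20x", "over 400x").
models = {
    "baseline": {"accuracy": 1.00, "efficiency": 1.0},
    "SimpleFold-3B": {"accuracy": 0.95, "efficiency": 20.0},
    "SimpleFold-100M": {"accuracy": 0.88, "efficiency": 400.0},
}


def relative_cost(efficiency):
    """Compute cost relative to the baseline.

    Assumption: an efficiency factor of N means about 1/N of the compute.
    """
    return 1.0 / efficiency


def summarize(models):
    """Return (name, accuracy, relative cost, accuracy given up) per model."""
    rows = []
    for name, m in models.items():
        cost = relative_cost(m["efficiency"])
        rows.append((name, m["accuracy"], cost, 1.0 - m["accuracy"]))
    return rows


for name, acc, cost, given_up in summarize(models):
    print(f"{name:16s} accuracy={acc:.0%}  cost<={cost:.2%}  given_up={given_up:.0%}")
```

Read this way, the 3B model gives up about five points of relative accuracy for at most one twentieth of the compute, and the 100M model gives up about twelve points for at most one four-hundredth.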

Behind the Scenes: Rethinking Model Architecture

The architecture underpinning SimpleFold diverges sharply from that of established systems. Earlier tools have typically relied on specialized mechanisms such as multiple sequence alignments and geometric constraints, effectively embedding biochemical expertise directly into their operations. The new models dispense with these bespoke modules and rely almost entirely on the attention mechanism that has propelled breakthroughs in natural language processing. This decision not only reduces complexity but also positions the technology for faster scaling and broader accessibility.
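
For readers unfamiliar with attention, here is a minimal sketch of generic scaled dot-product self-attention in plain Python. It is not SimpleFold's implementation: it uses identity query/key/value projections for brevity, whereas real transformer blocks add learned projections, multiple heads, residual connections, normalization, and feed-forward layers. The toy "residue" embeddings are made up for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    tokens: list of equal-length float vectors, one per sequence position.
    Returns one output vector per position.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in tokens:
        # Similarity of this position to every position in the sequence.
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs


# Three toy "residue" embeddings (hypothetical values).
residues = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(residues)
```

The point of the sketch is that nothing in it is specific to proteins: every position attends to every other position, and any structural knowledge has to be learned from data rather than built into the module.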

The development process included testing against widely accepted benchmarks, including CAMEO22 and CASP14. These are among the most stringent evaluations of model robustness, generalization, and atom-level placement. SimpleFold’s top configuration matched or exceeded expectations, not just in raw scoring but also in how quickly and smoothly the models could be deployed, even on hardware with more modest resources. The team’s results suggest that a general-purpose, transformer-based design can handle protein folding tasks, challenging a prevailing assumption in computational biology.

Efficiency, Open Access, and Future Directions

One standout feature of this release is its accessibility. With the code and model checkpoints openly released, researchers and practitioners across scientific disciplines can adapt and deploy the models quickly. SimpleFold’s general-purpose backbone also suggests broad compatibility: because it mirrors the structure of popular AI models, it lends itself to common fine-tuning techniques, straightforward distillation, and easier integration with established workflows in related fields.

The efficiency gains are not merely theoretical: smaller versions of SimpleFold run fast enough for direct use in time-sensitive pipelines while still preserving key performance metrics. This makes the models practical options for laboratories and organizations with limited computational infrastructure, without sacrificing scientific rigor. The move away from complex, finely tuned geometric modules points toward a future in which universal bio-models, capable of handling diverse protein-related challenges, can be built on a consistent, transformer-based foundation.

Simplified, Scalable, and Poised to Influence Bioinformatics

By adopting a pared-back, transformer-driven methodology, Apple’s release marks a pivotal moment in the evolution of protein structure prediction. The streamlined design of the models, combined with their computational economy, opens new possibilities for large-scale biological research and rapid hypothesis testing. As life sciences increasingly intersect with AI-driven methods, the influence of adaptable, efficient architectures is set to grow, enabling researchers to tackle scientific questions previously constrained by computational limits.

For anyone invested in the progress of drug discovery, genetic research, or molecular modeling, the arrival of this accessible and compute-efficient suite of AI models marks a significant milestone. By moving away from domain-specific complexity and toward a general-purpose, transformer-based design, it opens the door to a new generation of systems that can drive progress in both basic research and applied biosciences.