How AI-Driven Real-Time Sign Language Translation Is Transforming Accessibility and Connection

AI-Powered Speech-to-Sign Language Translation Breaks New Ground in Communication

Revolutionizing Accessibility with Real-Time, Two-Way Communication

A technology initiative from China is drawing international attention: a lightweight artificial intelligence system that translates between spoken and sign languages in real time. The project, currently in its prototype phase, is being developed by Limitless Mind, a company working to reduce communication barriers for people who are deaf or hard of hearing. Because the AI is designed to run on consumer devices such as smart glasses and smartphones, the technology is meant to bring inclusive interaction to a broader audience. Accessibility sits at the core of the design: the goal is for users to communicate without expensive equipment or specialized training.

This innovation is more than a standard translation tool. Instead of simply transcribing audio into text, it is designed to convey whole conversations in a form that feels natural to sign language users. The distinction matters because many people with hearing loss find written text ineffective or burdensome. For many, sign language, with its gestures, facial expressions, and nuanced rhythms, is their first language and their most comfortable and efficient channel for communication. Unlike many traditional solutions, this system is built around the realities of sign language: its vocabulary, its visual syntax, and its reliance on more than hand motions. It accounts for the coordinated movement of the whole body, including the tilt of the head and changes in facial expression, so that the meaning of the message is preserved.

Beyond Text: Recognizing the Complexity of Visual Language

Text-based alternatives have long served the deaf and hard-of-hearing community, but they fall short for several reasons. First, not everyone with hearing loss reads written language fluently, especially when its grammar differs from that of the sign language they use every day. Second, sign languages are not universal. Regional variations abound, sometimes even within the same country, which makes a “one-size-fits-all” approach ineffective. The pace of signing, physical expression, and localized gestures can all change how a sign is interpreted. High-fidelity translation has to capture these subtleties, not just the broad strokes of vocabulary and grammar. That is why tools need to go well beyond audio-to-text models and treat sign language as a full, expressive mode of communication.

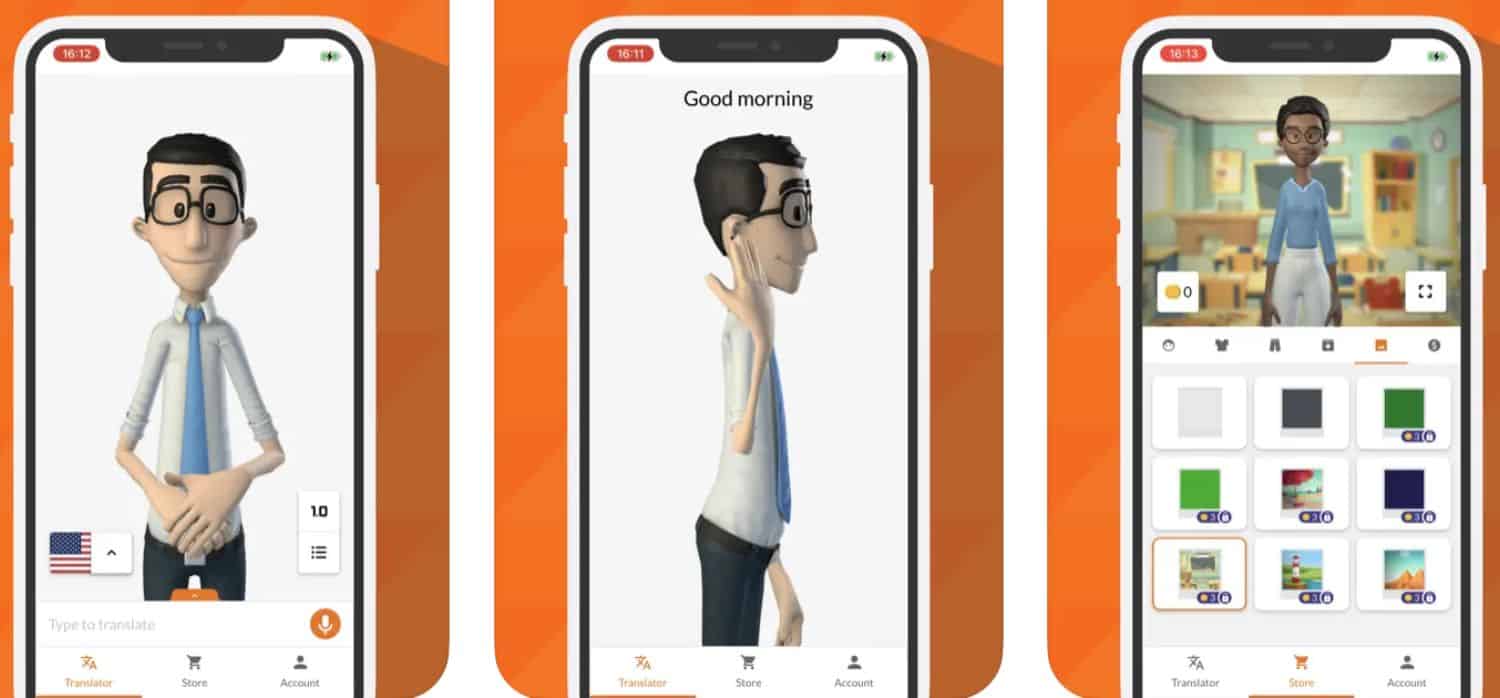

To meet these requirements, the developer assembled a dataset of approximately 12,000 annotated videos covering diverse sign language variations, sometimes referred to as “dialects.” Each video is annotated with 3D coordinates for hand shapes and movements, body orientation, and facial cues. This level of detail lets the system recognize real signing and convert it into readable text and, in the reverse direction, turn text or spoken language into signs rendered by a digital avatar. The intended result is a tool that follows the natural back-and-forth of a bilingual conversation, so that interaction feels authentic and immediate.
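
To make the annotation scheme more concrete, here is a minimal Python sketch of how one annotated video might be represented. The developer's actual data format has not been published, so every name here (`Frame`, `AnnotatedClip`, `wrist_relative`), the field layout, and the convention that the first hand keypoint is the wrist are illustrative assumptions only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class Frame:
    """One video frame of annotations (hypothetical layout)."""
    hand_keypoints: List[Point3D]   # assumed: index 0 is the wrist
    body_orientation: Point3D       # assumed: yaw, pitch, roll in degrees
    facial_cues: Dict[str, float]   # assumed: cue name -> intensity in [0, 1]


@dataclass
class AnnotatedClip:
    """One annotated video with its dialect label and text translation."""
    clip_id: str
    dialect: str
    text: str
    frames: List[Frame] = field(default_factory=list)


def wrist_relative(frame: Frame) -> List[Point3D]:
    """Express hand keypoints relative to the wrist, so the hand shape
    does not depend on where the signer stands in the camera view."""
    wx, wy, wz = frame.hand_keypoints[0]
    return [(x - wx, y - wy, z - wz) for x, y, z in frame.hand_keypoints]


# Usage: a one-frame clip with two hand keypoints (values are made up).
frame = Frame(
    hand_keypoints=[(1.0, 2.0, 3.0), (2.0, 4.0, 6.0)],
    body_orientation=(0.0, 5.0, 0.0),
    facial_cues={"brow_raise": 0.8},
)
clip = AnnotatedClip(clip_id="demo-001", dialect="example-region",
                     text="hello", frames=[frame])
relative_points = wrist_relative(clip.frames[0])
```

Keeping hand, body, and face annotations together in each frame reflects the point made above: the meaning of a sign depends on all three channels, not on hand motion alone.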

AI and the Future of Inclusive Interactions

This technological leap doesn’t stand alone in today’s evolving assistive communication landscape. Other prominent players have launched cloud-based solutions and virtual interpreters, signaling a robust trend toward mainstreaming such tools. These competitors include large technology firms utilizing cloud infrastructure for remote translation, as well as emerging models designed specifically for widely used sign languages around the world. While each solution offers unique technical features, the convergence of AI, gesture detection, and digital avatars marks a new standard: bi-directional, automated, and context-aware translation that bridges hearing and Deaf communities.

Bringing this vision into daily life holds profound implications. A truly accessible translation system can spur advances in education, healthcare, and workplace integration by removing persistent barriers to participation and understanding. Teachers, clinicians, and employers gain a practical way to engage Deaf signers directly, using technology that “speaks” in the language most comfortable and expressive for the user. As further investment and development propel these prototypes toward commercial release, the promise of equitable, real-time communication is closer than ever—offering hope for millions to connect, learn, and thrive in a more inclusive society.

Keywords

AI sign language translation, real-time sign language interpreter, inclusive communication, smart glasses translation, speech-to-sign technology, assistive technology, accessibility innovation, sign language dialects, digital avatar translator, wearable language devices