DeepSeek V3.1 Terminus Revealed: Boosting AI Model Performance, Reliability and Agent Efficiency

DeepSeek V3.1 Terminus Update: Pushing AI Model Performance and Reliability Further

Major Improvements Unveiled in DeepSeek V3.1 Terminus

DeepSeek V3.1 Terminus, the newly enhanced version of DeepSeek's flagship AI model, marks a significant step forward in natural language generation and autonomous agent capabilities. Building on the existing V3.1 model, the Terminus update specifically targets inconsistencies in linguistic output that users had reported. According to the development team, the revision substantially reduces the accidental insertion of abnormal characters and stray Chinese characters into responses, including mixed Chinese-English text. More consistent language output makes the model more dependable for international and multilingual users.
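To check the language-consistency improvement on your own workloads, a simple heuristic is to count characters from the basic CJK Unified Ideographs block (U+4E00 to U+9FFF) and obviously broken characters in replies that should be English-only. The sketch below assumes that block is a good enough proxy for "stray Chinese characters" (it ignores the CJK extension blocks and full-width punctuation) and treats replacement characters, private-use code points and unexpected control characters as "abnormal". The function names are hypothetical and not part of any DeepSeek tooling.

```python
import unicodedata

# Basic CJK Unified Ideographs block only; extension blocks are ignored here.
CJK_START, CJK_END = 0x4E00, 0x9FFF

def is_cjk_ideograph(ch: str) -> bool:
    return CJK_START <= ord(ch) <= CJK_END

def is_abnormal(ch: str) -> bool:
    """Flag U+FFFD replacement characters, private-use code points and stray control characters."""
    if ch == "\ufffd":
        return True
    category = unicodedata.category(ch)
    if category == "Co":  # private-use area
        return True
    # Control characters other than ordinary whitespace are treated as debris.
    return category == "Cc" and ch not in "\n\r\t"

def consistency_report(text: str) -> dict:
    """Count stray CJK ideographs and abnormal characters in a reply expected to be English."""
    cjk = [ch for ch in text if is_cjk_ideograph(ch)]
    odd = [ch for ch in text if is_abnormal(ch)]
    return {
        "cjk_count": len(cjk),
        "abnormal_count": len(odd),
        "clean": not cjk and not odd,
    }

print(consistency_report("The function returns a list."))
print(consistency_report("The function 返回 a list.\ufffd"))
```

Running the report over a fixed set of prompts before and after switching model versions gives a rough, workload-specific view of whether the reported reduction holds for your use case.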

The release also improves agent-based task execution. Post-training optimization yields more effective tool use, better multi-step task reasoning, and higher "thinking efficiency" in agent operations. The Code Agent and Search Agent in particular have been further optimized, so developers who build on them can streamline project workflows and obtain faster, more accurate results across a wide range of computational tasks.

Users can try these upgrades firsthand on DeepSeek's official online platform, where the updated version is available for live testing. Hybrid inference (switchable thinking and non-thinking modes) and a context window of up to 128K tokens remain core features, reflecting DeepSeek's commitment to operational flexibility and deep integration in enterprise, research, and professional settings.
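As of this writing, DeepSeek's API documentation exposes the two inference modes as separate model names on an OpenAI-compatible chat completions endpoint: `deepseek-chat` for non-thinking mode and `deepseek-reasoner` for thinking mode. The sketch below only builds the HTTP request with Python's standard library and does not send it; the helper name is hypothetical, `YOUR_API_KEY` is a placeholder, and the model names should be checked against the current API docs before you rely on them.

```python
import json
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # OpenAI-compatible endpoint

def build_chat_request(prompt: str, api_key: str, thinking: bool) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the chosen inference mode."""
    payload = {
        # Model aliases as documented at the time of writing; verify against current docs.
        "model": "deepseek-reasoner" if thinking else "deepseek-chat",
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

req = build_chat_request("Summarize this changelog.", api_key="YOUR_API_KEY", thinking=True)
print(req.get_method(), req.full_url)
print(json.loads(req.data)["model"])
# To actually send it, pass the request to urllib.request.urlopen() with a real key.
```

Keeping the mode switch as a single boolean makes it easy to route quick lookups to non-thinking mode and harder multi-step problems to thinking mode from the same code path.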

Breakthroughs in Model Architecture and Data Handling

Behind Terminus's language precision sits a refined tokenizer and chat template system. According to DeepSeek, the updated tokenizer configuration helps minimize accidental cross-language blending and preserves formatting integrity, especially when processing large multilingual inputs. DeepSeek V3.1, the model Terminus builds on, also extended its long-context pretraining phases with substantially more documents, which helps the model handle longer, more complex workflows and deeper informational exchanges.

The model family also emphasizes computational efficiency. DeepSeek V3.1 was trained using the UE8M0 FP8 scale data format, which keeps it compatible with microscaling data formats and the hardware designed around them. Lower-precision FP8 arithmetic reduces memory use and can raise throughput, a direct benefit for developers who need both cost-efficiency and reliable output quality in real-world applications.
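To make the UE8M0 idea concrete: as we understand it, UE8M0 stores only an unsigned 8-bit exponent (no sign bit, no mantissa bits), so every scale factor is an exact power of two. The OCP Microscaling (MX) specification defines an equivalent E8M0 scale with a bias of 127 and the byte 0xFF reserved for NaN. The sketch below picks one shared power-of-two scale for a block of values so that the scaled block fits the FP8 E4M3 range (largest finite magnitude 448), then encodes that scale as a UE8M0 byte. Rounding the scale up, the block contents, and the helper names are illustrative assumptions, and the final rounding of each element to FP8 is deliberately left out.

```python
import math

E4M3_MAX = 448.0   # largest finite FP8 E4M3 magnitude
UE8M0_BIAS = 127   # exponent bias of the 8-bit scale

def ue8m0_encode(scale: float) -> int:
    """Encode an exact power-of-two scale as an unsigned 8-bit exponent byte."""
    mantissa, exp = math.frexp(scale)  # scale == mantissa * 2**exp, mantissa in [0.5, 1)
    if mantissa != 0.5:
        raise ValueError("UE8M0 can only represent exact powers of two")
    byte = (exp - 1) + UE8M0_BIAS
    if not 0 <= byte <= 254:  # 0xFF is reserved for NaN
        raise ValueError("scale outside the UE8M0 range")
    return byte

def ue8m0_decode(byte: int) -> float:
    """Turn a UE8M0 byte back into its power-of-two scale."""
    return 2.0 ** (byte - UE8M0_BIAS)

def scale_block(block: list[float]) -> tuple[int, list[float]]:
    """Choose a shared power-of-two scale so every scaled value fits the E4M3 range."""
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        scale = 1.0
    else:
        # Round the scale up to a power of two so that amax / scale <= E4M3_MAX.
        scale = 2.0 ** math.ceil(math.log2(amax / E4M3_MAX))
        while amax / scale > E4M3_MAX:  # guard against log2 rounding error
            scale *= 2.0
    byte = ue8m0_encode(scale)
    # A real kernel would now round each scaled value to FP8 E4M3; this sketch stops here.
    return byte, [x / scale for x in block]

byte, scaled = scale_block([0.5, -3.0, 1000.0])
print(byte, ue8m0_decode(byte), scaled)
```

Because the scale is a pure power of two, applying or removing it only shifts exponents, which is part of why exponent-only scales are attractive for hardware.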

The openly released model weights and the accompanying API upgrades reflect a transparent, developer-focused approach. Support for strict function calling (offered through the Beta API), JSON output mode, and large context windows makes the platform straightforward to integrate with modern data pipelines and AI agent frameworks. Companies and individuals concerned about data privacy and customizability can retain control by deploying the open weights on-premises or within highly regulated cloud environments.
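The sketch below shows what these two API features look like in an OpenAI-compatible request, based on our reading of DeepSeek's documentation at the time of writing: strict mode is enabled per function with `"strict": true` and sent to the Beta endpoint, and JSON mode is requested with `response_format` set to `{"type": "json_object"}`, with the prompt itself mentioning JSON and showing the expected shape. The `get_weather` tool, the helper names, and the sample response are hypothetical; the sample is hand-written in the OpenAI-compatible shape, not real model output. Requiring every property and setting `additionalProperties` to false is an assumption about what strict schemas expect.

```python
import json

# Strict tool definitions are documented as a Beta feature; send them to this endpoint.
BETA_URL = "https://api.deepseek.com/beta/chat/completions"

# Hypothetical tool, used only for illustration.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "strict": True,
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
            "additionalProperties": False,
        },
    },
}

def build_tool_payload(question: str) -> dict:
    return {
        "model": "deepseek-chat",
        "messages": [{"role": "user", "content": question}],
        "tools": [WEATHER_TOOL],
    }

def build_json_mode_payload(question: str) -> dict:
    # JSON mode: the prompt should mention JSON and show the desired structure.
    system = 'Reply in JSON of the form {"answer": "...", "confidence": 0.0}.'
    return {
        "model": "deepseek-chat",
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
        "response_format": {"type": "json_object"},
    }

def extract_tool_calls(response: dict) -> list[tuple[str, dict]]:
    """Return (function name, parsed arguments) pairs from an OpenAI-style response."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    return [(c["function"]["name"], json.loads(c["function"]["arguments"])) for c in calls]

# Hand-written example in the OpenAI-compatible response shape (not real output).
sample = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": "",
            "tool_calls": [{
                "id": "call_0",
                "type": "function",
                "function": {"name": "get_weather", "arguments": "{\"city\": \"Hangzhou\"}"},
            }],
        }
    }]
}
print(extract_tool_calls(sample))
```

Parsing the `arguments` string with `json.loads` on the client side is still worthwhile even with strict mode, since it turns any malformed call into an immediate, visible error.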

Performance on Agent-Based Tasks: Setting New Standards

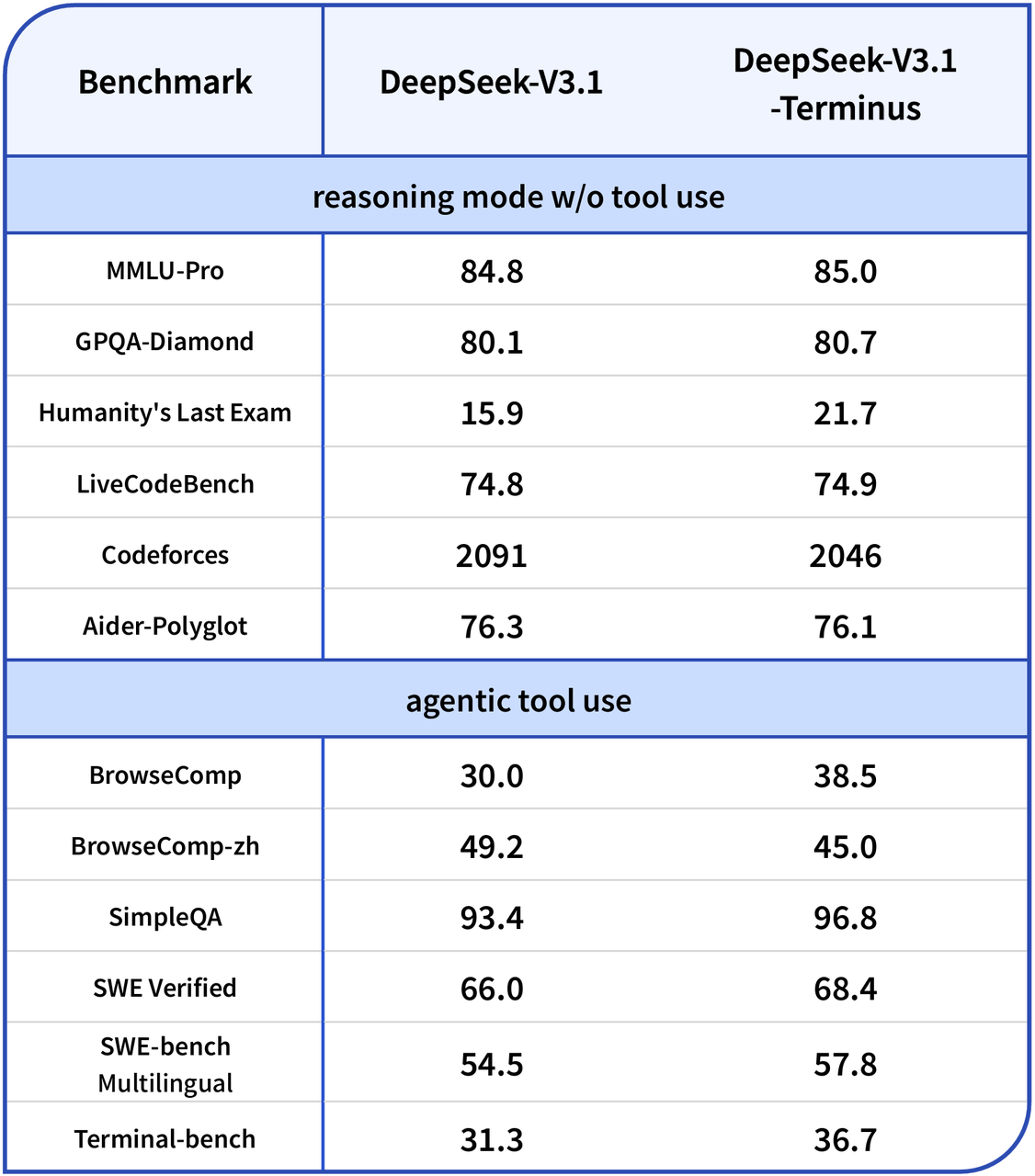

The standout improvement in this revision is agent-driven performance. In benchmark tests for code and search agents, DeepSeek V3.1 Terminus shows marked gains in reasoning, coding proficiency, and context management. The model keeps the same Mixture-of-Experts architecture as DeepSeek-V3 (671B total parameters, about 37B activated per token), so these gains come from training refinements rather than structural changes, and they show up as more accurate multi-step logic and stronger code generation.
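For readers unfamiliar with Mixture-of-Experts, the core idea is that a router scores every expert for each token and only the top-k experts actually run; their outputs are combined with the router's gate weights. The toy sketch below loosely follows the gating described in the DeepSeek-V3 technical report (sigmoid affinity scores, with gates normalized over the selected experts only). It is a simplification, not DeepSeek's implementation: it omits the shared expert, the bias-based load balancing and node-limited routing, takes router logits as given instead of computing them from hidden states, and uses 4 toy experts rather than the 256 routed experts (8 active per token) that the report describes.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def route(logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the top-k experts by sigmoid affinity and normalize their gates to sum to 1."""
    scores = [sigmoid(z) for z in logits]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    total = sum(scores[i] for i in top)
    return [(i, scores[i] / total) for i in top]

def moe_layer(x: list[float], logits: list[float], experts, k: int = 2) -> list[float]:
    """Weighted sum of the outputs of the selected experts; unselected experts never run."""
    out = [0.0] * len(x)
    for idx, gate in route(logits, k):
        y = experts[idx](x)
        out = [o + gate * v for o, v in zip(out, y)]
    return out

# Toy experts: expert i simply scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(4)]

print(route([0.1, 2.0, -1.0, 1.0], k=2))
print(moe_layer([1.0, 2.0], [0.1, 2.0, -1.0, 1.0], experts, k=2))
```

Only the selected experts' computation is paid per token, which is how a model with a very large total parameter count can keep the per-token compute closer to that of a much smaller dense model.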

These enhancements should translate into better automation, less manual oversight, and lower operational overhead for complex AI-driven projects. Whether in software engineering, scientific analysis, or cross-border collaboration, the update positions DeepSeek's model at the forefront of AI reliability and scalability. Its streamlined cost structure, which benefits from more efficient loading and lower resource consumption, broadens accessibility and lets organizations accelerate innovation without prohibitive infrastructure demands.

In summary, the release of DeepSeek V3.1 Terminus not only upgrades technical fidelity and language consistency but also substantially raises the bar for agent task execution in real-world scenarios. With accessible live testing and transparent deployment options, it signals a new era for AI model performance, combining robust engineering with user-driven flexibility.

Key Technical Advantages and Industry Impact

DeepSeek V3.1 Terminus introduces pivotal changes that reshape expectations for large language models. A context window of up to 128K tokens and large-scale sparse parameterization allow the model to outperform many older systems, especially on lengthy or intricate documentation tasks. Its emphasis on language reliability, with fewer non-standard symbols and cleaner output, further cements the model's place as a trusted resource for global deployments.
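For lengthy documentation tasks it helps to check, before sending anything, whether an input plus the expected answer fits the context window, and to split it if not. The sketch below is a rough budget calculator under two loud assumptions: "128K" is taken conservatively as 128,000 tokens, and token counts are estimated at about 4 characters per token, a common rule of thumb for English text with BPE tokenizers rather than a property of DeepSeek's tokenizer. For exact counts, use the model's own tokenizer. The helper names and the 1,024-token safety reserve are hypothetical.

```python
CONTEXT_TOKENS = 128_000   # assumption: "128K" read conservatively as 128,000 tokens
CHARS_PER_TOKEN = 4        # rough English-text heuristic, not DeepSeek's tokenizer

def estimate_tokens(text: str) -> int:
    """Estimate token count with ceiling division on the character length."""
    return -(-len(text) // CHARS_PER_TOKEN)

def fits_in_context(prompt: str, max_output_tokens: int, reserve: int = 1024) -> bool:
    """True if estimated prompt tokens plus output budget and reserve fit the window."""
    return estimate_tokens(prompt) + max_output_tokens + reserve <= CONTEXT_TOKENS

def chunk_text(text: str, max_output_tokens: int, reserve: int = 1024) -> list[str]:
    """Split text into character chunks that each leave room for the output and reserve."""
    budget_tokens = CONTEXT_TOKENS - max_output_tokens - reserve
    if budget_tokens <= 0:
        raise ValueError("output budget leaves no room for input")
    step = budget_tokens * CHARS_PER_TOKEN
    return [text[i:i + step] for i in range(0, len(text), step)]

doc = "y" * 1_000_000
chunks = chunk_text(doc, max_output_tokens=8000)
print(len(chunks), [fits_in_context(c, 8000) for c in chunks])
```

Splitting by raw character count can cut through words or sentences; a production pipeline would split on paragraph or section boundaries and count tokens with the real tokenizer.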

The model weights are released under the MIT License, and this update reinforces the role of open-source licensing in fostering rapid innovation and global adoption. Organizations need not compromise on security or custom functionality, because open weights allow tailored implementations and on-premises management. By reducing costs and license restrictions while improving agent task proficiency, DeepSeek V3.1 Terminus sets a new standard in the AI field, aligning technological advancement with practical utility and broad market impact.